If you are looking for a cloud-based big data analytics platform, consider Databricks. This platform uses the Spark data processing engine to eliminate redundancy and supports R, Scala, Python, and SQL languages. In this article, we will review what Databricks offers and how it can benefit your business.

Databricks is a cloud-based platform for big data analytics

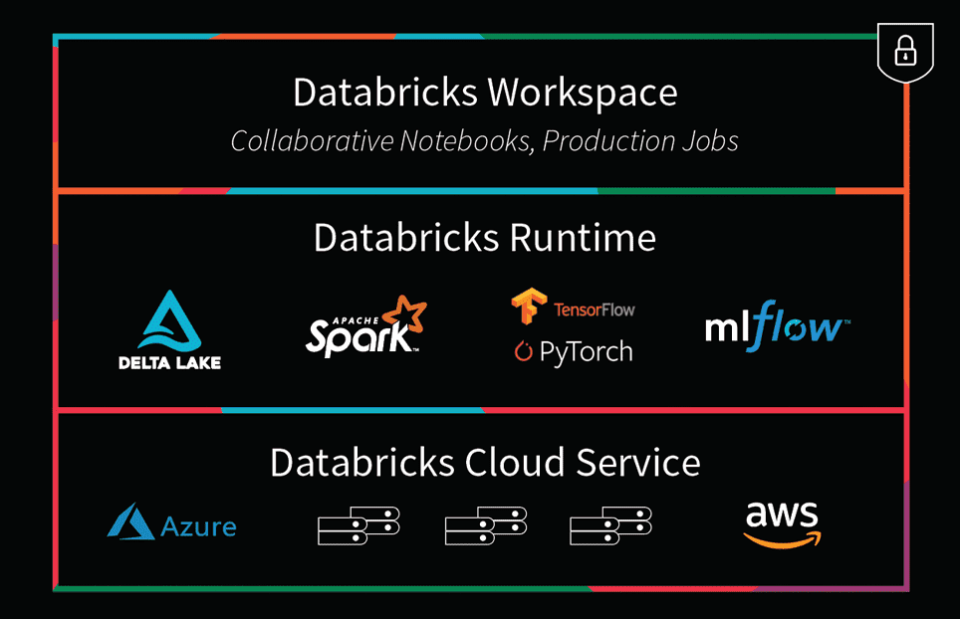

Databricks is a cloud-computing platform that allows users to perform big data analysis. Its processing capabilities include reading and writing data, calculating results, and combining data from various sources. It provides tooling for several programming languages, including Python, R, Scala, and SQL, and it is compatible with a variety of data storage providers and platforms. It works with development tools such as IntelliJ, PyCharm, and Visual Studio Code, and it offers integrations with third-party solutions for data preparation, machine learning, and data ingestion.

Databricks runs on cloud-based computing environments like AWS, Google Cloud Platform, and Azure. It is easy to set up and use, and can be integrated with existing cloud native applications. It also provides a web-based user interface for managing the workspace. Users do not need to learn about the cloud infrastructure beneath the platform, which is a great advantage for enterprises that want to take their big data analytics to the next level.

Databricks itself is a commercial platform, but it is built on popular open-source tools for big data analytics, such as Apache Spark, Delta Lake, and MLflow. Users can run Python, R, SQL, or Scala code on the platform. It is flexible, fast, and scales up to massive data sets.

Databricks’ core architecture runs on Apache Spark, an open-source analytics engine with a heavy focus on data parallelism. Spark uses a driver/worker architecture to make many servers behave as one. The driver splits a job into tasks, each worker (executor) node processes its own piece of the data, and the workers send their partial results back to the driver. The driver then assembles the final output.
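The driver/worker idea can be sketched in a few lines of plain Python. This is only a conceptual illustration that uses a local thread pool from the standard library; it is not Spark code, and the function names (`split_into_partitions`, `worker_task`, `driver`) are made up for this example.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_partitions(data, num_partitions):
    """Driver side: slice the input into roughly equal partitions."""
    if not data:
        return []
    size = -(-len(data) // num_partitions)  # ceiling division
    return [data[i:i + size] for i in range(0, len(data), size)]


def worker_task(partition):
    """Worker side: compute a partial result (sum of squares) for one partition."""
    return sum(x * x for x in partition)


def driver(data, num_workers=4):
    """Driver side: hand out partitions, then assemble the partial results."""
    partitions = split_into_partitions(data, num_workers)
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        partial_results = list(pool.map(worker_task, partitions))
    return sum(partial_results)


total = driver(list(range(1, 11)))
print(total)
```

In a real Spark cluster the workers are separate machines and the engine handles scheduling, data movement, and failure recovery, but the split, compute, and combine steps follow the same shape.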

Databricks’ community page is an excellent resource for learning how to use the platform. The community page offers helpful articles and links to other users. If you’re experiencing technical difficulties, you can also post them on the Databricks community page. In addition, you can read the Databricks Data Engineering blog for insights on using the platform.

It uses Spark as its data processing engine

As one of the leading big data solutions, Databricks uses Apache Spark as its core processing engine. Unlike many other big data solutions, Databricks does not require on-premises infrastructure. It runs its compute clusters on the cloud provider's virtual machines, such as AWS instances or Azure VMs. It also does not store data itself; instead, it uses native cloud storage, such as AWS S3 and Azure Data Lake Storage Gen2.

Spark began as a research project at UC Berkeley's AMPLab and has gained widespread adoption since it was donated to the Apache Software Foundation in 2013. Databricks, the company founded by Spark's original creators, worked with the community to release Apache Spark 1.0 in May 2014. This release was widely welcomed by the community and established a steady momentum for frequent releases. The Spark project now includes contributions from over 100 commercial vendors and is optimized for large-scale distributed data processing.

Databricks’ managed cloud platform enables businesses to scale quickly and easily. It eliminates the need for on-premises hardware and complex cluster management processes. With Databricks, you specify the clusters you need and how large they should be, and Databricks manages them for you.

Because it is built around Spark, Databricks streamlines the process of exploring data, prototyping in Spark, and running data-driven applications. It offers notebooks in which users can document their work and collaborate in real time. It also offers managed services and an integrated machine learning platform.

Spark is an open-source framework for big data processing. It connects to multiple databases and data sources and is widely used by large enterprises. Its high-level API and consistent architectural model make it suitable for a variety of use cases.

It eliminates data redundancy

Whether you need to analyze the past, predict future outcomes, or make better decisions, Databricks can help you get your data where it needs to be. This data warehouse and analytics platform is built on Apache Spark and optimized for cloud environments. Its flexible and scalable architecture enables users to run small or large Spark jobs without compromising performance. Databricks also lets you connect to a wide range of data sources. For example, the platform supports Avro files and MongoDB, and it can run queries against multiple sources simultaneously.

Data redundancy can be accidental or intentional. Accidental redundancy is caused by complex coding or inefficient data management. Intentional redundancy is introduced on purpose to improve data security and consistency, and multiple copies of data can be useful for disaster recovery and quality checks. When redundant copies exist, a single central field can be used as the source from which the copies are updated.

Data redundancy results in a larger database. This can cause problems such as slower loading and employee frustration, and it increases storage costs. For organizations with large data volumes these costs add up quickly, so it is important to find a way to reduce the database's size.
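As a rough illustration of removing accidental redundancy, the sketch below drops repeated records from a small in-memory list. It is plain Python rather than Databricks or Spark code, and the `drop_duplicates` helper and the sample `customers` records are hypothetical.

```python
def drop_duplicates(records, key_fields):
    """Keep the first occurrence of each record, comparing only key_fields."""
    seen = set()
    unique = []
    for record in records:
        key = tuple(record[field] for field in key_fields)
        if key not in seen:
            seen.add(key)
            unique.append(record)
    return unique


# Hypothetical sample data containing one accidental duplicate.
customers = [
    {"id": 1, "email": "a@example.com"},
    {"id": 2, "email": "b@example.com"},
    {"id": 1, "email": "a@example.com"},
]

deduplicated = drop_duplicates(customers, key_fields=("id", "email"))
print(len(customers), "->", len(deduplicated))
```

Choosing the key fields is the important design decision: comparing on too few fields can merge records that are genuinely different, while comparing on too many can miss near-duplicates.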

Databricks is a cloud-based data platform made by the creators of Apache Spark. The open-source Spark framework underneath it provides a variety of data capabilities, including Spark SQL for queries and Spark's machine learning library for building predictive models. The platform also features an integrated caching layer that helps speed up data processing. Moreover, Databricks is compatible with Microsoft Azure, Amazon Web Services, and Google Cloud Platform.

It supports R, Scala, Python and SQL languages

With Databricks, you can create interactive dashboards that combine existing code, images, and outputs, and you can group code cells to keep a notebook organized. Databricks offers a free Community Edition, and its University Alliance program supports teaching and learning on the platform. It is a good option for data scientists and data engineers who want to build their own interactive visualizations.

Databricks is built on open-source technology and supports R, Scala, Python, and SQL. It includes a runtime based on Apache Spark and tools based on open-source projects such as Delta Lake and MLflow. Together, these form a unified data analytics platform that scales across ecosystems and languages. With Databricks, you can read, write, and analyze data in your preferred language.

Databricks works with most major cloud platforms, including AWS and Azure. It is built on the open-source Spark framework and, on Azure, integrates with Azure Blob Storage, Azure Data Lake, and Azure Data Factory. The platform is well documented and offers extensive support.

Beyond its support for R, Scala, Python, and SQL, Databricks is compatible with many other services. For example, it supports Azure Storage, AWS S3, MongoDB, SQL databases, and Apache Kafka. Databricks is also a collaborative environment that lets you share workspaces with other data scientists, which can make data analysis more efficient.

Databricks is a unified analytics platform that eliminates silos between data scientists and data engineers. Its goal is to make data processing easy and powerful. It was founded by the creators of Apache Spark and is designed to scale across cloud platforms.

It integrates with third-party solutions

The Databricks platform supports a wide range of data sources and integrations with third-party applications. It can read and write data from a variety of sources and supports a range of data formats. The platform also supports developer tools such as IDEs and connectors, and developers can automate it through infrastructure-as-code (IaC) platforms and REST APIs. It offers several authentication methods and is compatible with a range of databases.
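To give a feel for the REST API, the sketch below builds (but does not send) a request that would list the clusters in a workspace. It assumes the Clusters API path `/api/2.0/clusters/list` and bearer-token authentication; the workspace URL and token are placeholders, and the `build_list_clusters_request` helper is hypothetical. Check the REST API reference for your workspace before relying on the exact path or version.

```python
import urllib.request


def build_list_clusters_request(workspace_url, token):
    """Build a GET request for the cluster list endpoint (assumed path)."""
    url = workspace_url.rstrip("/") + "/api/2.0/clusters/list"
    return urllib.request.Request(
        url,
        headers={"Authorization": f"Bearer {token}"},
        method="GET",
    )


# Placeholder values; substitute your own workspace URL and access token.
request = build_list_clusters_request(
    "https://example.cloud.databricks.com/",
    "EXAMPLE-TOKEN",
)
print(request.get_method(), request.full_url)
```

Passing the request to `urllib.request.urlopen` would actually send it; that step is left out here so the example runs without network access or real credentials.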

Databricks’ first offering was on AWS. It was only available on a multi-year contract basis, and users were required to use the Databricks console to manage data. Users also had to manage separate accounts and budgets, and the platform was completely separate from other AWS tools and services.

Databricks’ underlying technology, Apache Spark, is well suited to batch, streaming, and machine learning workloads. However, Spark on its own does not provide a full set of data connectors or visual data pipeline authoring. Databricks competes with data warehouse platforms such as Amazon Redshift and the data warehouse services on Microsoft Azure.

Databricks also offers a validated ecosystem of partner technologies that make building and deploying data products much easier. One partner in that ecosystem is Rivery, whose platform has 180 prebuilt data connectors. Customers can also use its Starter Kits to quickly launch data models and build their ML/AI workflows.

Managing cloud infrastructure can be daunting, even for the most experienced DevOps engineers. Adding and managing inter-cloud resources is complicated, and resolving dependencies is a time-consuming task. Fortunately, Databricks Cloud Automation makes it easy and fast.