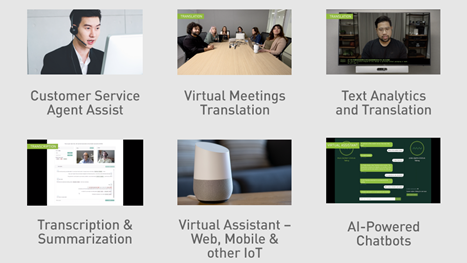

NVIDIA Riva is a GPU-accelerated software development kit (SDK) for building state-of-the-art speech AI applications for call centers, digital assistants, and video conference transcription.

ASR

NVIDIA Riva provides an end-to-end workflow for creating speech AI applications, from audio transcription to virtual assistants. It includes pre-configured ASR pipelines with acoustic models, Helm charts, and Jupyter notebooks to get these workflows running.

These AI workflows also make it faster and simpler to build and deploy speech recognition in call centers, improving customer experience with minimal latency and 10x higher inference throughput. Riva's GPU-accelerated compute pipeline is optimized for both performance and accuracy in streaming and offline recognition use cases.

To improve recognition accuracy, a Riva ASR pipeline can be configured with an n-gram language model that is used during decoding. The language model is supplied when the pipeline is built: either one you trained yourself or one trained with NVIDIA NeMo.
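To make the role of the language model concrete, here is a minimal sketch of what an n-gram model computes: a bigram model with add-one (Laplace) smoothing, trained on a toy corpus, that assigns a log-probability to a word sequence. Riva loads pre-built n-gram model files rather than Python code like this; the corpus, the function names, and the smoothing choice are illustrative assumptions.

```python
import math
from collections import Counter


def train_bigram(sentences):
    """Count unigrams and bigrams, padding each sentence with <s> and </s>."""
    unigrams, bigrams = Counter(), Counter()
    for sentence in sentences:
        tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
        unigrams.update(tokens)
        bigrams.update(zip(tokens, tokens[1:]))
    return unigrams, bigrams


def log_prob(sentence, unigrams, bigrams):
    """Sum of log P(w_i | w_{i-1}) using add-one smoothing over the vocabulary."""
    vocab_size = len(unigrams)
    tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
    total = 0.0
    for prev, word in zip(tokens, tokens[1:]):
        numerator = bigrams[(prev, word)] + 1
        denominator = unigrams[prev] + vocab_size
        total += math.log(numerator / denominator)
    return total


# Toy training corpus (hypothetical call-center phrases).
corpus = [
    "please check my account balance",
    "check my balance",
    "please reset my password",
]
uni, bi = train_bigram(corpus)

# A familiar word order scores higher than a scrambled one.
print(log_prob("check my balance", uni, bi) > log_prob("balance my check", uni, bi))
```

During decoding, scores like these help the decoder prefer transcripts that read like plausible language for the domain.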

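During decoding, the acoustic model produces per-frame token probabilities, and the decoder combines these scores with the language model's scores to rank candidate transcripts. The best-scoring transcript hypothesis is then returned to the application. A greedy decoder, by contrast, simply picks the most likely token at each frame and does not use the language model.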
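For comparison, greedy decoding can be sketched in a few lines, assuming a CTC-style acoustic model whose output is one probability distribution per audio frame with a special "blank" token at index 0 (both assumptions for illustration). The decoder takes the most likely token per frame, collapses consecutive repeats, and drops blanks.

```python
def greedy_ctc_decode(frame_probs, vocab, blank_id=0):
    """Pick the most likely token per frame, collapse repeats, then drop blanks."""
    best_ids = [max(range(len(p)), key=p.__getitem__) for p in frame_probs]
    decoded = []
    previous = None
    for token_id in best_ids:
        # Emit a token only when it changes and is not the blank symbol.
        if token_id != previous and token_id != blank_id:
            decoded.append(vocab[token_id])
        previous = token_id
    return "".join(decoded)


vocab = ["_", "c", "a", "t"]  # index 0 is the CTC blank (assumption)
frames = [
    [0.1, 0.8, 0.05, 0.05],  # c
    [0.1, 0.7, 0.1, 0.1],    # c again: collapsed into one "c"
    [0.6, 0.1, 0.2, 0.1],    # blank
    [0.1, 0.1, 0.7, 0.1],    # a
    [0.1, 0.1, 0.1, 0.7],    # t
]
print(greedy_ctc_decode(frames, vocab))  # cat
```

Because each frame is decided independently, nothing here can correct an acoustically plausible but linguistically unlikely transcript; that is the gap a language model fills.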

ASR pipelines can also be configured to return multiple transcript hypotheses for each utterance, which gives the application alternative candidates to choose from when an utterance is hard to recognize. To enable this, pass the `--max_supported_transcripts=N` option to the `riva-build` command.
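The idea behind returning several hypotheses can be sketched as follows, under simplifying assumptions: each candidate transcript carries an acoustic-model log-score and a language-model log-score, candidates are ranked by a weighted sum, and the top N are returned. The candidates, the scores, and the `lm_weight` value are made up for illustration; this is not Riva's decoder implementation.

```python
from typing import List, NamedTuple


class Hypothesis(NamedTuple):
    text: str
    am_score: float  # acoustic-model log-probability (hypothetical values)
    lm_score: float  # language-model log-probability (hypothetical values)


def n_best(hypotheses: List[Hypothesis], n: int, lm_weight: float = 0.5) -> List[str]:
    """Rank by am_score + lm_weight * lm_score and keep the top n transcripts."""
    ranked = sorted(
        hypotheses,
        key=lambda h: h.am_score + lm_weight * h.lm_score,
        reverse=True,
    )
    return [h.text for h in ranked[:n]]


candidates = [
    Hypothesis("recognize speech", am_score=-4.0, lm_score=-3.0),
    Hypothesis("wreck a nice beach", am_score=-3.8, lm_score=-9.0),
    Hypothesis("recognise peach", am_score=-4.5, lm_score=-8.0),
]
print(n_best(candidates, n=2))  # ['recognize speech', 'wreck a nice beach']
```

With `lm_weight=0` the ranking is purely acoustic and "wreck a nice beach" comes first; the language model is what lifts the more plausible sentence to the top, while the runner-up remains available to the application as an alternative.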

NVIDIA Riva supports several acoustic model architectures for ASR inference. For details on installing and configuring these models, refer to the acoustic model section of the Riva documentation.

TTS

NVIDIA Riva gives developers everything they need to build multilingual voice and text-to-speech (TTS) AI applications for the cloud, data centers, edge devices, and embedded systems. It includes ASR and TTS pipelines as well as preconfigured models for real-time transcription, virtual assistants, and accessibility solutions. The premium edition of NVIDIA Riva adds unlimited usage across all clouds and access to NVIDIA AI experts for long-term support of production deployments. A free 90-day trial is also available through the NVIDIA Developer Program, and Riva is offered as a containerized application that also runs on Linux ARM64 GPU-based devices. Contact the IoT Worlds Team for any support.

Riva's TTS engine uses a two-stage pipeline to turn text into audio. In the first stage, a spectrogram generator (the acoustic model) produces a frame-level acoustic representation of the input text; in the second stage, a neural vocoder converts that representation into an audio waveform. Riva TTS supports numerous languages and voices, and SSML lets you customize synthesis characteristics such as pitch and rate, and control pronunciation with phoneme tags.
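SSML controls like these go in the request text itself. The helper below is a minimal sketch that builds an SSML string with a `<prosody>` element for pitch and rate and an optional `<phoneme>` element for one word's pronunciation, then checks that the result is well-formed XML. The attribute values (the `x-arpabet` alphabet, the phoneme string format, and the pitch and rate formats) are assumptions for illustration; check the Riva SSML documentation for the values your version accepts.

```python
import xml.etree.ElementTree as ET
from xml.sax.saxutils import escape, quoteattr


def build_ssml(text, pitch="+1", rate="110%", word=None, phonemes=None):
    """Wrap text in <speak>/<prosody>; optionally mark one word's pronunciation."""
    body = escape(text)  # escape &, <, > so arbitrary text stays valid XML
    if word and phonemes:
        # Hypothetical pronunciation override: alphabet and phoneme format are assumptions.
        phoneme_tag = (
            f'<phoneme alphabet="x-arpabet" ph={quoteattr(phonemes)}>'
            f"{escape(word)}</phoneme>"
        )
        body = body.replace(escape(word), phoneme_tag, 1)
    return (
        f"<speak><prosody pitch={quoteattr(pitch)} rate={quoteattr(rate)}>"
        f"{body}</prosody></speak>"
    )


ssml = build_ssml("Welcome to Riva", word="Riva", phonemes="R IY1 V AH0")
root = ET.fromstring(ssml)  # raises ParseError if the markup is malformed
print(ssml)
```

Validating locally with `ElementTree` catches unbalanced tags or unescaped characters before the text is sent to the TTS service.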

Riva offers two TTS modes. In batch mode, the client sends text to Riva and receives the complete synthesized audio once processing finishes. In streaming mode, Riva returns audio chunks as soon as they are generated, which reduces latency (time to first speech).
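The latency difference between the two modes comes down to simple arithmetic. The sketch below simulates synthesis as a generator that finishes one audio chunk at a time with a given compute cost; the per-chunk millisecond figures are made up, and `synthesize_chunks` is a hypothetical stand-in, not a Riva API. In batch mode nothing can play until every chunk is done, while in streaming mode playback can start after the first chunk.

```python
from typing import Iterator, List, Tuple


def synthesize_chunks(chunk_costs_ms: List[int]) -> Iterator[Tuple[int, bytes]]:
    """Hypothetical TTS stand-in: yield (elapsed_ms, audio_chunk) as each chunk finishes."""
    elapsed = 0
    for index, cost in enumerate(chunk_costs_ms):
        elapsed += cost
        yield elapsed, bytes([index])  # placeholder audio payload


def time_to_first_audio(chunk_costs_ms: List[int], streaming: bool) -> int:
    """Batch waits for the last chunk; streaming returns as soon as the first chunk exists."""
    elapsed = 0
    for elapsed, _chunk in synthesize_chunks(chunk_costs_ms):
        if streaming:
            return elapsed
    return elapsed


costs = [40, 35, 35, 30]  # hypothetical per-chunk synthesis times in ms
print(time_to_first_audio(costs, streaming=False))  # 140
print(time_to_first_audio(costs, streaming=True))   # 40
```

Total synthesis time is the same in both modes; streaming only changes when the listener starts hearing audio, which is why it matters most for long responses.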

The TTS pipeline uses a neural vocoder, such as WaveGlow (described in the paper "WaveGlow: A Flow-based Generative Network for Speech Synthesis"), to convert frame-level acoustic features into a sequence of audio samples; chaining the acoustic model with the vocoder produces the output audio stream. SSML markup in the request text lets you customize pitch, rate, and pronunciation (through phonemes) for fine-grained control over the TTS output.
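Because a vocoder turns each spectrogram frame into a fixed number of waveform samples (the hop length), the length of the output audio follows directly from the number of frames. In the sketch below, the 22,050 Hz sample rate and 256-sample hop length are common defaults for this kind of model and are assumptions here, not values taken from a specific Riva model.

```python
def frames_to_audio(num_frames: int, hop_length: int = 256, sample_rate: int = 22050):
    """Return (num_samples, duration_seconds) a vocoder produces for num_frames frames."""
    num_samples = num_frames * hop_length
    return num_samples, num_samples / sample_rate


samples, seconds = frames_to_audio(100)
print(samples, round(seconds, 3))  # 25600 1.161
```

At these settings each frame covers roughly 11.6 ms of audio, so the acoustic model effectively controls speaking rate by how many frames it assigns to each sound.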

NMT

NVIDIA Riva offers the fastest, most flexible way to build and deploy speech AI, drawing on 10 years of NVIDIA innovation in AI hardware, model architectures, training techniques, inference optimizations, and deployment solutions. Riva supports multiple languages, letting developers customize and deploy state-of-the-art NMT models and combine them with text-to-speech (TTS) for high-fidelity conversational AI with natural, human-like speech. NVIDIA TensorRT™ optimizations and NVIDIA Triton™ Inference Server deliver real-time performance.
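One way to picture NMT working alongside speech models is as a chain of three stages: speech is transcribed, the transcript is translated, and the translation is synthesized. The sketch below wires together placeholder stage functions; `fake_asr`, `fake_nmt`, and `fake_tts` are hypothetical stand-ins, not Riva client calls, and a real deployment would replace each with a request to the corresponding Riva service.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class TranslationPipeline:
    asr: Callable[[bytes], str]  # audio -> source-language text
    nmt: Callable[[str], str]    # source-language text -> target-language text
    tts: Callable[[str], bytes]  # target-language text -> audio

    def speech_to_speech(self, audio: bytes) -> bytes:
        transcript = self.asr(audio)
        translation = self.nmt(transcript)
        return self.tts(translation)


# Hypothetical stand-ins for the three services.
def fake_asr(audio: bytes) -> str:
    return audio.decode("utf-8")


def fake_nmt(text: str) -> str:
    return {"good morning": "buenos días"}.get(text, text)


def fake_tts(text: str) -> bytes:
    return text.encode("utf-8")


pipeline = TranslationPipeline(fake_asr, fake_nmt, fake_tts)
print(pipeline.speech_to_speech(b"good morning").decode("utf-8"))  # buenos días
```

Keeping each stage behind a plain callable makes it easy to swap one service (for example, a domain-tuned NMT model) without touching the others.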

NVIDIA Riva also offers pretrained NMT models, alongside its ASR and TTS models, trained on large datasets to achieve strong accuracy compared with traditional approaches. You can fine-tune these models with NVIDIA NeMo™ to speed up development of domain-specific speech AI systems.

The NVIDIA Riva Quick Start scripts are an efficient way to get up and running. They automate the step-by-step process of deploying Riva services in Docker containers locally, and a Helm chart provides push-button deployment of Riva speech AI services to Kubernetes clusters.
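The local Quick Start flow boils down to running a few shell scripts in order, after adjusting settings such as which services to enable in the package's `config.sh`. The Python sketch below prints the commands by default (a dry run) and only executes them when asked. The script names follow the Riva Quick Start package, but treat the exact sequence as an assumption and confirm it in the Quick Start guide for your Riva version; the directory name is hypothetical.

```python
import subprocess
from typing import List

# Scripts from the Riva Quick Start package; order assumed from the Quick Start guide.
QUICKSTART_STEPS = ["riva_init.sh", "riva_start.sh"]


def quickstart_commands() -> List[List[str]]:
    """Build one bash invocation per Quick Start step, in order."""
    return [["bash", script] for script in QUICKSTART_STEPS]


def run_quickstart(quickstart_dir: str, dry_run: bool = True) -> None:
    """Print each step; only execute it (inside quickstart_dir) when dry_run is False."""
    for command in quickstart_commands():
        print(f"(cd {quickstart_dir} && {' '.join(command)})")
        if not dry_run:
            # check=True raises CalledProcessError so later steps don't run after a failure
            subprocess.run(command, cwd=quickstart_dir, check=True)


# "riva_quickstart" is a hypothetical directory name; use wherever you unpacked the scripts.
run_quickstart("riva_quickstart")
```

The first script downloads the container images and models, which can take a while; the second launches the Riva server. The package also includes a `riva_stop.sh` script for shutting the services down afterwards.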

Riva supports NVIDIA Jetson AGX Xavier platforms for embedded use cases as well as data center deployments. The full list of supported hardware and software versions is in the support matrix in the Riva documentation. Interested in buying NVIDIA hardware? Contact the IoT Worlds Team today.

Riva features an updated version of the NVIDIA NGC Speech API with a simplified API that supports ASR, TTS, and NMT models. Riva also remains compatible with existing NGC Speech API versions, so users can keep using existing models from NGC.

Pretrained Models

NVIDIA Riva offers pretrained speech, NLP, and TTS models that speed up application development, with world-class accuracy and real-time performance for conversational AI skills such as automated transcription, dictation, and voice assistants, all available through ready-to-use AI workflows and APIs. NVIDIA Triton™ Inference Server simplifies development and deployment of speech AI apps built with these workflows. Contact IoT Worlds today to get started.

NLP models are at the core of speech AI, providing functions such as named entity recognition (NER), punctuation and capitalization, and intent classification. NVIDIA Riva provides an end-to-end enterprise framework for building, customizing, and deploying large NLP models with billions of parameters at scale for hyperpersonalization.
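Punctuation and capitalization models are commonly framed as token classification: for every word in the raw ASR transcript, the model predicts whether to capitalize it and which punctuation mark, if any, follows it. The sketch below shows only the final step, applying such per-word labels to a transcript; the labels are hand-written rather than predicted, and the `(punctuation, capitalize)` label format is an assumption for illustration.

```python
from typing import List, Tuple


def apply_punct_capit(words: List[str], labels: List[Tuple[str, bool]]) -> str:
    """labels[i] = (punctuation_after_word_i, capitalize_word_i); '' means no punctuation."""
    if len(words) != len(labels):
        raise ValueError("need exactly one label per word")
    out = []
    for word, (punct, capitalize) in zip(words, labels):
        token = word[:1].upper() + word[1:] if capitalize else word
        out.append(token + punct)
    return " ".join(out)


raw = "hello my name is anna how can i help".split()  # lowercase, unpunctuated ASR output
labels = [(",", True), ("", False), ("", False), ("", False), (".", True),
          ("", True), ("", False), ("", True), ("?", False)]
print(apply_punct_capit(raw, labels))  # Hello, my name is Anna. How can I help?
```

Predicting one label per word keeps the output aligned with the ASR transcript, so timestamps and word confidences from recognition still line up after formatting.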

The Riva SDK and NVIDIA TensorRT™ make these NLP workflows and APIs available with high-performance inference on desktops, mobile devices, and inference servers. TensorRT optimizes inference for NVIDIA GPUs, delivering up to 10x faster inference than traditional inference libraries.

Riva offers an extensive range of features for customizing acoustic and language models to meet the requirements of your use case, including word boosting, custom vocabulary and lexicon (pronunciation) entries, text normalization, and fine-tuning of the acoustic model. In addition, starting from pretrained models with the Train Adapt Optimize (TAO) Toolkit can shorten training while improving inference performance.
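Word boosting can be pictured as a score bonus during decoding: hypotheses containing a boosted word, such as a product name, get extra credit so they can outrank acoustically similar alternatives. The sketch below applies a flat per-occurrence bonus when rescoring whole hypotheses; this is a simplification for illustration, not how Riva's decoder applies boosts internally, and the scores are invented.

```python
def boosted_best(hypotheses, boosted_words, boost=2.0):
    """Return the hypothesis text with the highest score after word boosting.

    hypotheses: list of (text, log_score) pairs from a decoder (hypothetical values).
    boosted_words: words whose every occurrence adds `boost` to the score.
    """
    boosted = {w.lower() for w in boosted_words}

    def score(item):
        text, log_score = item
        hits = sum(1 for token in text.lower().split() if token in boosted)
        return log_score + boost * hits

    return max(hypotheses, key=score)[0]


hyps = [("call ray va support", -5.0), ("call riva support", -6.0)]
print(boosted_best(hyps, []))        # call ray va support
print(boosted_best(hyps, ["Riva"]))  # call riva support
```

The boost value is a trade-off: too small and rare domain terms still lose, too large and the decoder starts inserting the boosted word where it was never spoken.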

Riva can import and run NVIDIA NeMo models, enabling hyperpersonalization and at-scale deployment of speech AI applications. Pretrained models are available through NGC, and you can further optimize them with Riva to reach world-class accuracy for conversational AI skills.

Deployment

NVIDIA Riva is part of the NVIDIA AI Enterprise software platform, which is designed to speed up the development and deployment of production AI. Using GPU-accelerated hardware and software, Riva lets you build multilingual speech and translation AI applications tailored to your use case, with real-time performance in production environments.

Getting started with Riva is simple. Use the NGC CLI to download the Riva Quick Start scripts, which pull the required Docker images and Riva models from NGC and deploy Riva services locally for testing, or to cloud or edge infrastructure. Need support? Contact IoT Worlds today.

Deployment options for Riva include local Docker and Kubernetes. For Kubernetes, a Helm chart automates push-button deployment into clusters; it is available from the NGC Helm repository.

Riva comes equipped with pre-trained automatic speech recognition (ASR) and text-to-speech (TTS) models that are trained on public, open-source datasets for English. You can further fine-tune these models using the NVIDIA TAO Toolkit for optimal results.

T-Mobile uses NVIDIA Riva ASR to transcribe customer calls into text for its call center agents to review, while Artisight built a smart hospital solution with voice-enabled kiosks that use NVIDIA Riva TTS to deliver accurate pronunciation, tone, and accent in each language. Solutions like these can be integrated with NVIDIA Fleet Command, a hybrid-cloud platform for securely deploying, managing, and scaling AI across dozens of servers or edge devices, which is currently available in early access.

Are you ready to develop and deploy your IoT AI solution with IoT Worlds? Contact us today.