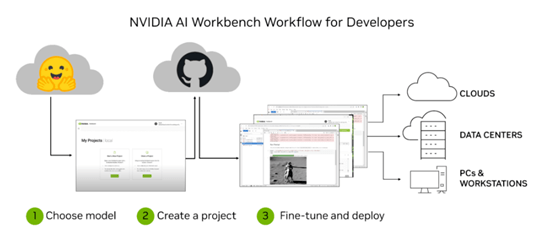

NVIDIA AI Workbench is a unified developer toolkit that covers every stage of the AI development lifecycle. It enables developers to quickly customize and deploy generative models on Windows PCs or on workstations running Linux.

Because it connects to popular repositories such as GitHub and Hugging Face, as well as to NVIDIA NGC, NVIDIA’s enterprise web portal, AI Workbench gives enterprises access to essential models, frameworks and software development kits in one comprehensive developer toolkit.

What is NVIDIA AI Workbench?

NVIDIA AI Workbench streamlines the creation of artificial intelligence models. It lets developers build cost-effective, scalable generative models and deploy them quickly to any environment, combining NVIDIA GPU acceleration with an easy-to-use developer platform. Developers at every level can rapidly test generative AI models on a PC or workstation, then scale them across data centers, public clouds or NVIDIA DGX Cloud. Its user-friendly interface also makes it easy for team members to collaborate on shared projects.

NVIDIA unveiled the platform at SIGGRAPH 2023, the annual conference on computer graphics and interactive techniques. AI Workbench is designed for creating, testing and customizing pretrained generative AI models and large language models (LLMs) on a desktop or workstation, then scaling them out across data centers, public clouds or NVIDIA DGX Cloud.

The platform aims to give experts and novices alike a seamless development experience by removing the complex technical tasks that often stall AI projects. Developers can set up containers and development environments on Windows and Linux machines with a single click, work with GPU-optimized frameworks in JupyterLab and VS Code, and scale from multi-GPU desktops and high-end workstations to NVIDIA RTX servers without complex configuration or lengthy waits.

Beyond speeding up training, AI Workbench simplifies working with complex models through integrated model management and visualization features. Its streamlined deployment process improves collaboration across platforms, and it supports both TensorFlow and PyTorch, so developers can choose their preferred tools.

The success of any generative AI project depends on deploying it in real-world applications, but moving models into production can be intensive and time-consuming, involving many steps from data preparation to model tuning. Many developers also lack the expertise this task requires. NVIDIA AI Workbench aims to ease these difficulties with tools designed specifically to accelerate enterprise generative AI development.

Streamlined generative model customization

NVIDIA AI Workbench stands out as an enterprise solution because it simplifies generative model customization. It shortens training and deployment time, giving developers more time to focus on AI solutions rather than managing deployment workflows by hand, and it makes fine-tuning existing models simpler, which can improve accuracy and performance over time.

AI Workbench’s main function is accelerating model training, but it also offers other features that enhance productivity and simplify workflows. It provides a centralized workspace that consolidates multiple frameworks and libraries into a single tool, lets developers train and test models on desktop PCs, GPU servers, cloud instances and NVIDIA DGX systems, and helps them automate and optimize production deployments.

AI development can be complex, requiring many steps: data preparation, model selection, architecture design, training and efficient tuning of complex models. As a result, AI solutions often take more time and resources to develop than conventional software.

NVIDIA AI Workbench was designed to simplify every stage of developing artificial intelligence applications, from data preparation through deployment. Its unified workspace brings together popular deep learning frameworks such as TensorFlow and PyTorch so developers can choose their preferred tools, recognizing that different AI professionals have different preferences and needs.

NVIDIA AI Enterprise 4.0, which works alongside AI Workbench, offers business-ready tools for deploying generative models in the cloud, including NVIDIA NeMo (a cloud-native framework that provides end-to-end support for building, customizing and deploying large language model [LLM] applications) and NVIDIA Triton Management Service, which automates and optimizes production deployments.

NVIDIA AI Workbench is an AI development platform that puts GPU power in an accessible workspace. It runs on desktop PCs and workstations equipped with NVIDIA RTX graphics cards, and projects can also be deployed to the cloud and to NVIDIA DGX systems for high-performance training and fine-tuning of artificial intelligence models.

Deployment of generative models

NVIDIA AI Workbench was designed to streamline the creation, testing and deployment of generative models. The platform supports every step of this process, from data collection and preparation through modeling, simulation and visualization, and it lets teams collaborate on projects from any machine or environment with seamless integration across platforms. As a business tool, it helps companies speed up generative model development and deployment.

The platform will let developers build and fine-tune generative AI models on their own GPUs before deploying them to data centers or public clouds, which can reduce infrastructure costs and help mitigate security and performance risks. NVIDIA RTX GPUs make it straightforward to customize these models locally.

As Manuvir Das, NVIDIA’s vice president of enterprise computing, explained, AI Workbench provides “an easy path for cross-organizational teams to create the AI-powered applications which have rapidly become essential in modern business.” Developers can bring all the necessary models, frameworks and software development kits together in a single developer toolkit, iteratively refine models against proprietary data sets, and then scale their workloads across laptops, GPU servers, cloud instances and NVIDIA DGX Cloud.

Generative AI has immense potential for business transformation, but creating and deploying models can be complex and time-consuming. To accelerate adoption, businesses should give developers the tools and infrastructure they need to develop custom generative models, deploy and scale them in production, and collaborate efficiently throughout.

NVIDIA AI Workbench will let developers rapidly build, test and customize pretrained models from popular repositories such as Hugging Face and GitHub on their PC or workstation, then scale them out to any data center, public cloud or DGX Cloud environment. It also integrates with AWS Marketplace, Microsoft Azure Marketplace and Oracle Cloud Marketplace, so developers can more conveniently purchase NVIDIA DGX and other products.

Streamlined model validation

NVIDIA recently unveiled AI Workbench, a platform that helps developers customize and deploy generative models. AI Workbench makes it easier to work with large models by connecting to popular repositories, and users can also fine-tune generative models on proprietary data.

Manuvir Das, NVIDIA’s vice president of enterprise computing, has described AI Workbench as “a powerful solution” to the complex challenge of customizing large-scale AI projects at the enterprise level. It gives developers a centralized platform for building and testing AI-based applications locally or in the cloud. It supports JupyterLab, VS Code, containerized development environments, self-hosted registries and Git servers, enabling efficient collaboration across multiple machines with high reproducibility and transparency.

AI Workbench will simplify model validation, an integral step in developing any machine learning system. Its integration with GitHub, Hugging Face and NVIDIA NGC provides easy access to a wide range of open-source models. This suits businesses starting out with AI, since they can quickly test and optimize models without spending hours searching for suitable frameworks or tools.

The tool will also help developers accelerate model creation and testing on NVIDIA RTX PCs or workstations. The platform automatically recognizes the NVIDIA GPUs in these machines and adjusts performance settings accordingly, and users can collaborate on multi-site projects by moving work from one machine to another.

Developers interested in AI Workbench can register on NVIDIA’s website for early access and will be notified as soon as the tool becomes available. AI Workbench is part of NVIDIA’s commitment to accelerating AI for humanity: by combining industry-leading hardware with advanced software, it gives developers a platform for building AI solutions that transform industries and human experiences.